On November 1st, 2023, the Right2YourFace Coalition – a group of prominent civil society organizations and scholars – sent the letter below to the Minister of Public Safety, the Minister of Innovation, Science and Industry and other affected parties, stating that the government’s proposed privacy and AI legislation falls short and will be dangerous for Canadians.

Please take action to protect people in Canada from facial recognition technology!

Joint letter on Bill C-27’s impact on oversight of facial recognition technology

Dear Ministers,

As Bill C-27 comes to study by the Standing Committee on Industry and Technology (INDU), the Right2YourFace Coalition expresses our deep concerns with what Bill C-27 means for oversight of facial recognition technology (FRT) in Canada.

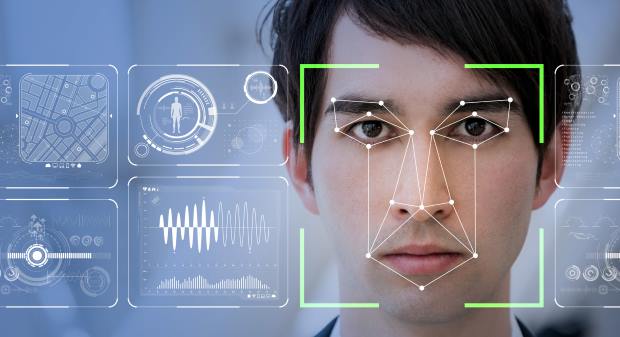

FRT is a type of biometric recognition technology that uses artificial intelligence (AI) algorithms and other computational tools to ostensibly identify individuals based on their facial features. Researchers have found that these tools are about as invasive as technologies get. Biometric data, such as our faces, are inherently sensitive types of information. As mentioned in our Joint Letter of Concern regarding the government’s response to the ETHI Report on Facial Recognition Technology and the Growing Power of Artificial Intelligence, the use of FRT threatens human rights, equity principles, and fundamental freedoms including the right to privacy, freedom of association, freedom of assembly, and the right to non-discrimination. AI systems are being adopted at an increasingly rapid pace and Canada needs meaningful legislation to prevent the harms that FRT poses. As it stands, Bill C-27 is not that legislation – it is not fit for purpose and is in dire need of significant amendments.

Bill C-27 comprises three parts, and our concerns lie primarily with two of them: the Consumer Privacy Protection Act (CPPA) and the Artificial Intelligence and Data Act (AIDA). The CPPA creates the rules for data collection, use, and privacy that flow into implementations covered by AIDA. While implementations like FRT are the target of AIDA, the datasets FRT systems rely on must be collected and used under the terms of the CPPA. Consequently, we submit that both the CPPA and AIDA require amendments to fully protect vulnerable biometric information.

We have identified five core issues with the Bill, including elements of both the CPPA and AIDA, that require immediate attention in order to avoid significant harm. They are:

- The CPPA does not flag biometric information as sensitive information, and it does not define “sensitive information” at all. This omission leaves some of our most valuable and vulnerable information—including the faces to which we must have a right—without adequate protections;

- The CPPA’s “legitimate business purposes” exemption is too broad and will not protect consumers from private entities wishing to use FRT;

- “High-impact systems” is undefined in AIDA. Leaving this crucial concept to be defined later in regulations leaves Canadians without a meaningful basis from which to assess the impact of the Act. The definition must appear in the Act itself and must include FRT;

- AIDA does not apply to government institutions, including national security agencies that use AI for surveillance, and exempts private sector AI technology developed for use by those national security agencies – creating an unprecedented power imbalance; and

- AIDA focuses on the concept of individual harm, which excludes the impacts of FRT on communities at large.

Biometric information is sensitive information and must be defined as such

Not all data are built the same, and they should not be treated the same. Biometric information is a particularly sensitive form of information that goes to the core of an individual’s identity. It includes, but is not limited to, face data, fingerprints, and vocal patterns, and carries with it particular risk for racial and gender bias. Biometric information must be considered as sensitive information and afforded relevant protections. While the CPPA mentions sensitive information in reference to the personal information of minors, the text of the Act neither defines nor protects it. This leaves some of our most valuable and vulnerable identifiable information without adequate protection. The CPPA should include special provisions for sensitive information, and its definition should explicitly provide for enhanced protection of biometric data – understanding that the safest biometric data is biometric data that does not exist.

“Legitimate business purposes” requires a better definition to protect against abuse

The CPPA states in provision 12(2) that purposes which “represent legitimate business needs of the organization” are appropriate purposes to collect user information without the user’s knowledge or consent. It is not difficult to see businesses framing their use of FRT as in service of legitimate business purposes like loss prevention, which is already happening in the private sector despite being established as violating Canadian privacy law. Disturbingly, the CPPA’s legitimate business purpose loophole tilts the scales in favour of business over personal privacy, suggesting that individuals’ privacy rights are less important than profit.

It should be demonstrably clear that a person’s rights and freedoms must be adequately balanced against business interests. Provision 5 of the CPPA states that the purpose of the Act is to establish “rules to govern the protection of personal information.” Businesses, thus, should not be given free rein to decide that their use of FRT, with the risks to privacy that come with the collection and use of that highly sensitive data, qualifies as legitimate, or that biometric data may be collected without an individual knowing or consenting.

What is a high-impact system?

AIDA imposes additional measures on “high-impact systems”, requiring those who administer them to “assess and mitigate risks of harm or biased output.” Given that FRT and its associated AI systems have the ability to identify individuals using the above-mentioned biometric information, FRT must be considered high-impact. Yet, the Act offers no definition of what qualifies as high-impact, instead leaving this crucial step to the regulations.

The risk-based analysis associated with high-impact systems suggested by the wording in AIDA takes us down the wrong path. Would a grocery store’s coupon-distribution system be considered high-impact and thus require assessment and mitigation of risks of harm or biased output? What if that system were using FRT? What may seem to be a low-impact system of coupon distribution may in fact be incorporating and collecting biometric data. Given the risks and harms that FRT poses for human rights and fundamental freedoms, FRT’s impacts are both high and dangerous.

A rights-based analysis must accompany risks-based calculations. A definition of high-impact systems that includes FRT and other biometric identification technologies must be included in the bill itself.

National Security is absent from the Bill but must be addressed within it

Section 3(2) of AIDA states that the Act does not apply to a “product, service, or activity under the direction or control of” government institutions including the Department of National Defence (DND), the Canadian Security Intelligence Service (CSIS), the Communications Security Establishment (CSE), or “any other person who is responsible for a federal or provincial department or agency and who is prescribed by regulation.” In plain language, private sector technology developed to be used by any of these institutions is exempt from AIDA’s reach. Given FRT’s connection to broader surveillance and AI-driven systems, excluding the DND, CSIS, and CSE—three pillars of Canada’s surveillance infrastructure—from AIDA leaves room for gross violations of privacy in the name of state security.

Further, provision 3(2)(d) gives regulators the ability to exclude whichever department or agency they please any time after AIDA has passed. This runs counter to the notion of accountable government and creates the risk that other organizations and departments using or wanting to use FRT may escape meaningful regulation and public consultation.

Collective – not just individual – harm must be considered

While protection of individuals’ data is central to AIDA, Parliament must remember that AI in general and FRT in particular are built on collective data that may pose collective harms to society. FRT systems are consistently less accurate for racialized individuals, children, elders, members of the LGBTQ+ community, and disabled people – which is in direct conflict with C-27’s intent to restrict biased outputs. This makes the inclusion of collective harm in C-27 all the more necessary.

Final Remarks

The above-outlined issues are by no means exhaustive but are crucial problems that leave Bill C-27 unequipped to protect individuals and communities from the risks of FRT. While we agree that Canada’s privacy protections need to meet the needs of an ever-evolving digital landscape, legislative and policy changes cannot be made at the cost of fundamental human rights or meaningful privacy protections. Parliament must meaningfully address these glaring issues. Together, we can work toward a digital landscape that prioritizes privacy, dignity, and human rights over profit.

Sincerely,

Canadian Civil Liberties Association

Privacy and Access Council of Canada

Ligue des droits et libertés

International Civil Liberties Monitoring Group

Criminalization and Punishment Education Project

The Dais at Toronto Metropolitan University

Digital Public

Tech Reset Canada

BC Freedom of Information and Privacy Association

See the full list of signatories here.

Watch the press conference here.
